
Optimizing your WordPress robots.txt file for SEO is a critical step to ensure search engines crawl and index the most important parts of your website while avoiding unnecessary or sensitive areas. Here’s a comprehensive guide to optimize your robots.txt for SEO:

1. Understand the Purpose of Robots.txt

The robots.txt file tells search engine crawlers which parts of your site they may crawl. It helps:

- Keep crawlers out of sensitive or irrelevant areas.

- Optimize crawl budget by directing crawlers to priority pages.

- Avoid duplicate content issues.

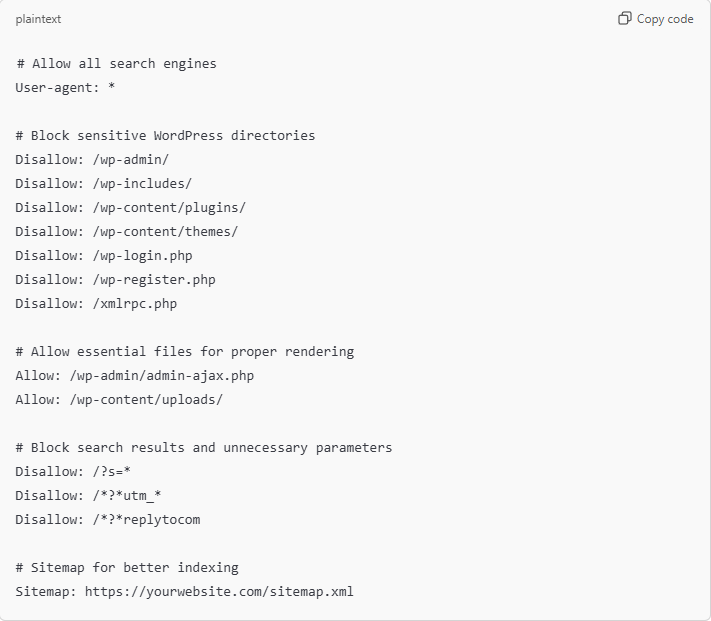

2. Create an SEO-Friendly Robots.txt File

    # Allow all search engines
    User-agent: *
    # Block sensitive WordPress directories
    Disallow: /wp-admin/
    Disallow: /wp-includes/
    Disallow: /wp-content/plugins/
    Disallow: /wp-content/themes/
    Disallow: /wp-login.php
    Disallow: /wp-register.php
    Disallow: /xmlrpc.php
    # Allow essential files for proper rendering
    Allow: /wp-admin/admin-ajax.php
    Allow: /wp-content/uploads/
    # Block search results and unnecessary parameters
    Disallow: /?s=*
    Disallow: /*?*utm_*
    Disallow: /*?*replytocom

    # Sitemap for better indexing
    Sitemap: https://yourwebsite.com/sitemap.xml
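
To see how a crawler might evaluate rules like these, here is a minimal Python sketch of the longest-match behavior described in RFC 9309 and in Google's robots.txt documentation: the matching pattern with the longest path wins, and Allow wins a tie. The names `pattern_to_regex` and `is_allowed`, the sample paths, and the choice of a subset of the rules are illustrative assumptions; real crawlers also handle percent-encoding and per-bot groups, which this sketch ignores.

```python
import re

# A subset of the rules from the file above, in file order.
RULES = [
    ("disallow", "/wp-admin/"),
    ("disallow", "/wp-login.php"),
    ("allow", "/wp-admin/admin-ajax.php"),
    ("allow", "/wp-content/uploads/"),
    ("disallow", "/?s=*"),
    ("disallow", "/*?*utm_*"),
    ("disallow", "/*?*replytocom"),
]


def pattern_to_regex(pattern):
    """Translate a robots.txt path pattern into a regex.

    '*' matches any run of characters, a trailing '$' anchors the end,
    and every other character is treated literally.
    """
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def is_allowed(path, rules=RULES):
    """Return True if `path` (path plus query string) may be crawled.

    The longest matching pattern wins; on a tie Allow wins; a path that
    matches no rule is allowed.
    """
    best_len, allowed = -1, True
    for kind, pattern in rules:
        if pattern_to_regex(pattern).match(path):
            length = len(pattern)
            if length > best_len or (length == best_len and kind == "allow"):
                best_len, allowed = length, kind == "allow"
    return allowed


for sample in ["/wp-admin/options.php", "/wp-admin/admin-ajax.php",
               "/?s=robots", "/blog/post/?utm_source=news", "/blog/post/"]:
    print(sample, "->", "allowed" if is_allowed(sample) else "blocked")
```

Under these assumptions, /wp-admin/admin-ajax.php stays crawlable even though /wp-admin/ is blocked, because the Allow pattern is the longer match.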

3. Key Rules to Include

- Block Unnecessary Areas:

- /wp-admin/: Contains backend admin files.

- /wp-includes/: Core WordPress files that don’t need indexing.

- /wp-content/plugins/ and /wp-content/themes/: Plugin and theme files don’t provide useful content for search engines.

- Allow Important Directories:

- Uploads Directory: Ensure that /wp-content/uploads/ is crawlable so images, PDFs, and other media files can be indexed.

- Avoid Duplicate Content:

- Block parameters like ?s= (search results), ?replytocom (comment replies), and ?utm_* (tracking codes).

- Sitemap Declaration:

- Specify your sitemap URL to make it easier for search engines to discover your content.

- Test and Validate:

- Use the robots.txt report in Google Search Console (the successor to the retired Robots.txt Tester) to ensure your file works as expected.
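
A quick local check can complement Search Console. The sketch below uses Python's standard `urllib.robotparser` module (with `site_maps()`, available from Python 3.8) to parse a small file and confirm the sitemap and a couple of rules. The `ROBOTS_TXT` sample, the `load_rules` helper, and the test URLs are illustrative assumptions. Note that this standard-library parser applies the first matching rule in file order and treats paths as plain prefixes, so it does not expand `*` wildcards the way Google does; the sample therefore sticks to simple paths.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical, simplified file: plain prefixes only, no wildcards.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-login.php
Disallow: /xmlrpc.php
Allow: /wp-content/uploads/

Sitemap: https://yourwebsite.com/sitemap.xml
"""


def load_rules(text):
    """Parse robots.txt text without making a network request."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


parser = load_rules(ROBOTS_TXT)
base = "https://yourwebsite.com"

report = {
    # List of declared sitemap URLs, or None if there are none.
    "sitemaps": parser.site_maps(),
    "uploads_crawlable": parser.can_fetch("*", base + "/wp-content/uploads/2024/logo.png"),
    "login_crawlable": parser.can_fetch("*", base + "/wp-login.php"),
}
print(report)
```

For a live site, `RobotFileParser.set_url()` followed by `read()` fetches the published file instead of a local string.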

4. Why Optimize Robots.txt for SEO?

- Control Crawl Budget:

- Search engines have a limited crawl budget for your site. By blocking unnecessary pages, you ensure they focus on indexing your important content.

- Prevent Indexing of Sensitive Data:

- Keeping crawlers away from admin files, login pages, and other sensitive URLs keeps irrelevant data out of search results. Because robots.txt is publicly readable and only advisory, it does not secure those URLs on its own.

- Enhance User Experience:

- Ensures search engines index the best content, leading to better search visibility and user experience.

5. Tips for Advanced SEO Optimization

- Customize for Specific Bots:

- Create rules for specific crawlers (e.g., Googlebot, BingBot) if needed:

    User-agent: Googlebot
    Disallow: /private-folder/

- Leverage Allow Rules:

- Explicitly allow critical assets like CSS, JS, and images:

    Allow: /wp-content/themes/theme-name/style.css

- Avoid Overblocking:

- Blocking critical resources (like JavaScript or CSS) may prevent Google from rendering your site properly.

- Redirect Old Pages:

- Use 301 redirects for obsolete pages to maintain SEO value, and do not add a Disallow rule for those URLs: a crawler that is blocked from a URL never fetches it and so never sees the redirect.
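
The effect of bot-specific groups can be checked with a short Python sketch, again using the standard `urllib.robotparser` module. The file contents and URLs here are illustrative assumptions. One detail worth noticing: a crawler that has its own group follows only that group, so in this sample Googlebot is not bound by the rules under `User-agent: *`.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical file with one bot-specific group and one catch-all group.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /private-folder/

User-agent: *
Disallow: /wp-login.php
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

private_url = "https://yourwebsite.com/private-folder/report.html"
login_url = "https://yourwebsite.com/wp-login.php"

# Only Googlebot is kept out of /private-folder/.
googlebot_private = parser.can_fetch("Googlebot", private_url)
bingbot_private = parser.can_fetch("Bingbot", private_url)

# Googlebot uses its own group, so the catch-all login rule does not apply to it.
googlebot_login = parser.can_fetch("Googlebot", login_url)
bingbot_login = parser.can_fetch("Bingbot", login_url)

print(googlebot_private, bingbot_private, googlebot_login, bingbot_login)
```

If a rule should apply to every crawler, it has to be repeated inside each bot-specific group as well as under `User-agent: *`.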

6. Best Practices for Robots.txt SEO

- Use SEO Plugins:

- Plugins like Yoast SEO or Rank Math allow easy editing and management of your robots.txt file directly from the WordPress dashboard.

- Regularly Update:

- Adjust your robots.txt rules as your site evolves (e.g., new pages, features, or plugins).

- Monitor Crawl Errors:

- Use Google Search Console to identify crawl errors caused by improper rules.

- Test Before Publishing:

- Always test your robots.txt file to avoid blocking essential pages unintentionally. In Google Search Console, the robots.txt report has replaced the older Robots.txt Tester.

7. Common Mistakes to Avoid

- Blocking All Bots:

    User-agent: *
    Disallow: /

- This stops all crawlers from crawling your site, which is bad for SEO.

- Blocking Media Files:

- Prevents images from appearing in Google Image Search:

    Disallow: /wp-content/uploads/

- Not Declaring a Sitemap:

- Forgetting to add the sitemap URL reduces indexing efficiency.
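
The three mistakes above are easy to check for automatically. The following Python sketch scans robots.txt text for a blanket block, a blocked uploads directory, and a missing Sitemap line. The function name `find_common_mistakes` and the warning strings are illustrative assumptions, and the check is a simple heuristic: it does not account for a later Allow rule that overrides a Disallow.

```python
BLOCKS_EVERYTHING = "all bots are blocked from the whole site"
BLOCKS_UPLOADS = "media uploads are blocked"
NO_SITEMAP = "no Sitemap line is declared"
UPLOADS = "/wp-content/uploads/"


def find_common_mistakes(robots_txt):
    """Return a list of warnings for the three mistakes described above."""
    warnings = []
    agents = []            # user agents of the group currently being read
    reading_rules = False  # True once the current group has seen a rule
    has_sitemap = False

    for raw in robots_txt.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if ":" not in line:
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()

        if field == "user-agent":
            if reading_rules:  # a User-agent after rules starts a new group
                agents, reading_rules = [], False
            agents.append(value)
        elif field in ("allow", "disallow"):
            reading_rules = True
            if field == "disallow":
                if value == "/" and "*" in agents:
                    warnings.append(BLOCKS_EVERYTHING)
                elif value and value != "/" and UPLOADS.startswith(value):
                    # The rule is a prefix of the uploads path, so it covers it.
                    warnings.append(BLOCKS_UPLOADS)
        elif field == "sitemap":
            has_sitemap = True

    if not has_sitemap:
        warnings.append(NO_SITEMAP)
    return warnings


print(find_common_mistakes("User-agent: *\nDisallow: /\n"))
```

A file that passes returns an empty list, which makes the function easy to drop into a deployment check.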

Final Checklist for Robots.txt Optimization

- Sensitive directories are disallowed.

- Essential resources like /uploads/ are allowed.

- Duplicate content parameters are blocked.

- Sitemap is declared.

- Tested and validated using Google’s tools.

By following these steps, you can ensure that your WordPress robots.txt file is optimized for SEO, improving crawl efficiency and maximizing your site’s search visibility.

Best Practices:

- Test Your Robots.txt: Use the robots.txt report in Google Search Console (the successor to the retired Robots.txt Tester) to verify your rules.

- Keep It Updated: Update the robots.txt file if your site structure changes or new directories need to be blocked.

- Avoid Over-Blocking: Don’t block resources like CSS or JavaScript files unless necessary, as they’re required for rendering your site correctly in search engines.

This file is beginner-friendly, secure, and ensures smooth crawling and indexing by search engines!